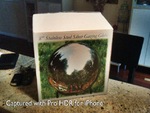

If you're looking for a cheap mirrored ball, you can buy an 8" Stainless Steel Gazing Ball here at Amazon.com for about $22. They also have a smaller 6" Silver Gazing Globe for about $14. It can easily be drilled and mounted to a pipe or dowel so that it can be clamped to a C-stand or a tripod.

First, I'm no Paul Debevec. I'm not even smart enough to be his intern. But I thought I'd share my technique for making HDRIs. My point being, I might not be getting this all 100% correct, but I like my results. Please don't get all over my shit if I say something a little off. As a matter of fact, if you find a better way to do something, please let me know. I write this to share with the CG community and I would hope that people will share back with constructive criticism.

First, let's start by clearing something up. HDRIs are like ATMs. You don't have an ATM machine. That would be an Automatic Teller Machine Machine (ATMM?). See, there's two machines in that sentence now. The same is true of an HDRI. You can't have an HDRI image. That would be a High Dynamic Range Image Image. But you can have an HDR image. Or many HDRIs. If you're gonna talk like a geek, at least respect the acronym.

I use the mirrored ball technique and a panoramic technique for capturing HDRIs. Which one really depends on the situation and the equipment you have. Mirrored balls are a great HDR tool, but a panoramic HDR is that much better since it captures all 360 degrees of the lighting. However, panoramic lenses and mounts aren't cheap, and mirrored balls are very cheap.

Shooting Mirrored Ball Images

I've been shooting mirrored balls for many years now. Mirrored balls work pretty damn good for capturing much of a scene's lighting. Many times on a set, all the lights are coming from behind the camera, and the mirrored ball will capture these lights nicely. Where a mirrored ball starts to break down is when lighting is coming from behind the subject. It will capture some lights behind the ball, but since that light only shows up in the very deformed edges of the mirrored ball, it's not gonna be that accurate for doing good rim lighting.

However, mirrored balls are cheap and easily available. You can get a garden gazing ball, or just a chrome Christmas ornament, and start taking HDR images today. Our smallest mirrored ball is one of those Chinese meditation balls with the bells in it. (You can get all zen playing with your HDRI balls.)

My balls are different sizes (isn't everyone's?) and I have 3. I use several different sizes depending on the situation. I have a 12" ball for large set live action shoots, and a 1.5" ball for very small miniatures. With small stop motion sets, there isn't a lot of room to work. The small 1.5" ball works great for that reason. I'll usually clamp it to a C-stand and hang it out over the set where the character will be. I also have a 4" ball that I can use for larger miniatures, or smaller live sets.

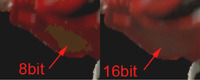

With everything set up like we've mentioned, I like to find the darkest exposure I can and then step the exposure all the way up until the image is blown out. I often skip 2-3 exposure brackets in between shots to keep the number of files down. You can make an HDRI from only 3 exposures, but I like to get anywhere from 5-8 different exposures.
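To get a feel for how much range a bracket like that covers, here's a rough Python sketch. It assumes you only change the shutter speed, that you step in whole stops (each stop doubles the exposure time), and that aperture and ISO stay put. The function name and the example numbers are made up for illustration; they aren't settings from an actual shoot.

```python
def bracket_shutter_times(darkest_time, stops_per_step, count):
    """Return `count` shutter times in seconds, darkest frame first.

    Assumes only the shutter speed changes between frames and that
    one stop doubles the exposure time.
    """
    if darkest_time <= 0 or stops_per_step <= 0 or count < 1:
        raise ValueError("need a positive time, step and count")
    return [darkest_time * 2.0 ** (i * stops_per_step) for i in range(count)]


# Hypothetical example: start at 1/4000 s and jump 2 stops per frame.
times = bracket_shutter_times(1 / 4000, stops_per_step=2, count=7)

# Stops between the darkest and the brightest frame.
total_range_in_stops = 2 * (len(times) - 1)
```

With those example numbers, 7 frames cover 12 stops, and the longest exposure ends up at about one second.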

When shooting a mirrored ball, shoot the ball as close as you can to where the CG subject will be. Don't freak out about it if you can't get exactly where the CG will be, but try to get it as close as you can. If the CG subject will move around a lot in the shot, then place the ball in an average position.

Shoot the ball from the movie plate camera angle. Meaning, set up your mirrored ball camera where the original plate camera was. This way, you'll always know that the HDRI will align to the back plate. Usually, on set, they will start moving the main camera out of the way as soon as the shot is done. I've learned to ask for the lights to be left on for 3 minutes. (5 min sounds too long on a live set. I love asking for 3 minutes since it sounds like less.) Take your mirrored ball photos right after the shot is done. Make nice nice with the director of photography on set. Tell him how great it looks and that you really hope to capture all the lighting that's been done.

Don't get caught up with little scratches being on your ball. They won't show up in your final image. Also don't worry about your own reflection being in the ball. You give off so little bounce light you won't even register in the final scene. (Unless you're blocking a major light on the set from the ball.)

We use a Nikon D90 as our HDR camera. It saves raw NEF files and JPG files simultaneously, and I use the JPGs sort of as thumbnail images of the raw files. I'm on the fence about using raw NEF files over the JPGs since you end up blending 6-8 of them together. I wonder if it really matters to use the raw files, but I always use them just in case it does really matter.

To process your mirrored ball HDR image, you can use a bunch of different programs, but I just stick with any recent version of Photoshop. I'm not holding your hand on this step. Photoshop and Bridge have an automated tool for processing files to make an HDR. Follow those procedures and you'll be fine. You could also use HDR Shop 1.0 to make your HDR images. It's still out there for free and is a very useful tool. I talk about it later when making the panoramic HDRIs.
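If you're curious what a merge tool is roughly doing under the hood, here's a bare-bones sketch for a single pixel. This is not what Photoshop or HDR Shop actually run. It assumes the pixel values are already linear (like raw data scaled to 0-1), and the hat-shaped weight and the function names are choices made just for this illustration.

```python
def hat_weight(value):
    """Trust mid-tones the most; clipped blacks and whites get zero weight."""
    return 1.0 - abs(2.0 * value - 1.0)


def merge_pixel(values, times):
    """Estimate relative scene brightness for one pixel.

    values: linear pixel values in 0..1, one per exposure (assumed linear)
    times:  matching exposure times in seconds
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for value, time in zip(values, times):
        weight = hat_weight(value)
        # value / time puts every exposure on the same brightness scale.
        weighted_sum += weight * value / time
        weight_total += weight
    if weight_total == 0.0:
        # Every sample is clipped: fall back to the shortest exposure.
        shortest_time, its_value = min(zip(times, values))
        return its_value / shortest_time
    return weighted_sum / weight_total


# Hypothetical pixel: fine at 0.1 s and 0.2 s, blown out at 1 s.
brightness = merge_pixel([0.2, 0.4, 1.0], [0.1, 0.2, 1.0])
```

The blown-out frame gets a weight of zero, so only the two usable exposures contribute, and they agree on the same brightness. That's the reason for shooting the whole bracket: every part of the scene is well exposed in at least one frame.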

Shooting Panoramic Images

The other technique is the panoramic HDRI. This is a little more involved and requires some equipment. With this method, I shoot 360 degrees from the CG subject's location with a fish-eye lens, and use that to get a cylindrical panoramic view of the scene. With this setup you get a more complete picture of the lighting since you can now see 360 degrees without major distortions. However, it's not practical to put a big panoramic swivel head on a miniature set. I usually use small meditation balls for that. Panoramic HDRIs are better for live action locations where you have the room for the tripod and spherical mount. To make a full panoramic image you'll need two things: a fisheye lens and a swivel mount.

First, you'll need something that can take a very wide angle image. For this I use the Sigma 4.5mm f/2.8 EX DC HSM Circular Fisheye Lens for Nikon Digital SLR Cameras ($899). Images taken with this lens will be small and round and capture about 180 degrees. (A cheaper option might be something like a converter fish-eye lens, but you'll have to do your own research on those before buying one.)

You'll need a way to take several of these images in a circle, pivoted about the lens. We want to pivot around the lens so that there will be minimum parallax distortion. With a very wide lens, moving the slightest bit can make the images very different, and they won't align for our HDRI later. To do this, I bought a Manfrotto 303SPH QTVR Spherical Panoramic Pro Head (Black) that we can mount to any tripod. This head can swivel almost 360 degrees. A step down from this is the Manfrotto 303PLUS QTVR Precision Panoramic Pro Head (Black), which doesn't allow 360 degrees of swivel. But with the 4.5mm fish-eye lens, I found you don't really need to tilt up or down to get the sky and ground. You'll get it by just panning the head around.

Once you've got all that, it's time to shoot your panoramic location. You'll want to set up the head so that the center of the lens is floating right over the center of the mount. Now in theory, this lens can take a 180 degree image, so you only need front and back, right? Wrong. You'll want some overlap, so take 3 sets of images for the panorama, each 120 degrees apart: 0, 120, and 240. That will give us the coverage we need to stitch up the image later.
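The overlap math is simple enough to sketch out. This little Python snippet assumes evenly spaced pan positions and a lens that covers the same angle in every direction. The function names are made up for illustration.

```python
def pan_positions(shot_count):
    """Evenly spaced pan angles in degrees, starting at 0."""
    step = 360.0 / shot_count
    return [i * step for i in range(shot_count)]


def overlap_between_neighbors(lens_fov_degrees, shot_count):
    """Degrees shared by two neighboring shots (negative means a gap)."""
    return lens_fov_degrees - 360.0 / shot_count


print(pan_positions(3))                    # [0.0, 120.0, 240.0]
print(overlap_between_neighbors(180, 3))   # 60.0
print(overlap_between_neighbors(180, 2))   # 0.0, nothing to blend at the seams
```

With a 180 degree lens, three positions leave 60 degrees of shared image at every seam, while two positions leave none.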

Just like the mirrored ball, I like to shoot the image back in the direction of the plate camera. Set up the tripod so that 120 degrees is pointing towards the original camera position. Then rotate back to 0 and start taking your multiple exposures. Once 0 is taken, rotate to 120, and again to 240 degrees. When we stitch this all together, the 120 position will be in the center of the image, and the seam of the image will be at the back where 0 and 240 blend.

Don't worry about people walking through your images, especially on a live action set. There is no time on set to wait for perfect conditions. By the time you blend all your exposures together, that person will disappear. Check out the Forest_Ball.hdr image. You can see me taking the photos, and a ghost in a yellow shirt on the right side.

Processing The Panoramic Images

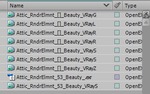

To build the panoramic from your images, you'll need to go through three steps: 1. Make the HDR images (just like for the mirrored ball). 2. Transform the round fish-eye images to square latitude/longitude images. 3. Stitch it all back together into a cylindrical panoramic image.

Like we talked about before, Adobe Bridge can easily take a set of different exposures and make an HDR out of them. Grab a set, and go to the menu under Tools/Photoshop/Merge to HDR. Do this for each of your 0, 120 and 240 degree images and save them out.

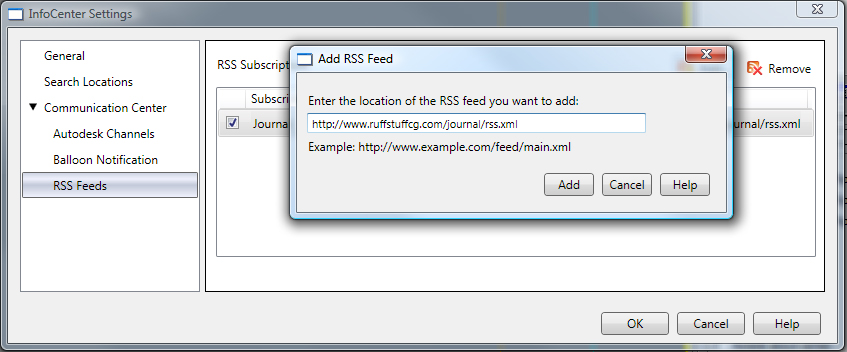

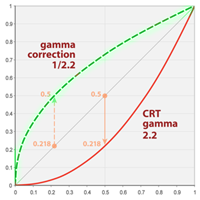

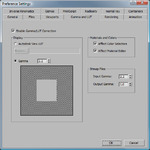

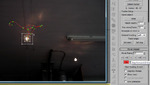

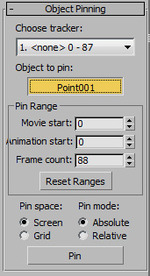

Photoshop doesn't have any tool for distorting a fish-eye image to a Lat/Long image. There are some programs that I investigated, but they all cost money. I like free. So to do this, grab a copy of HDR Shop 1.0. Open up each image inside HDR Shop and go to the menu Image/Panorama/Panoramic Transformations. We set the Source Image to Mirrored Ball Closeup and the Destination Image to Latitude/Longitude. Then set the resolution in height to something close to the original height.
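For anyone wondering what a ball-to-Lat/Long transformation is doing, here's a rough sketch of the geometry for the mirrored ball case. It is not HDR Shop's code and it makes no claim about HDR Shop's conventions. It assumes a perfect mirror sphere seen from far away (an orthographic view), with longitude 0, latitude 0 pointing from the ball straight back at the camera. A fish-eye lens has its own projection, which this sketch doesn't cover. All the names are made up for illustration.

```python
import math


def latlong_to_direction(lon, lat):
    """Unit vector for a longitude/latitude given in radians.

    Assumed convention: lon = 0, lat = 0 points from the ball straight
    back at the camera (+z), and +y is up.
    """
    return (math.cos(lat) * math.sin(lon),
            math.sin(lat),
            math.cos(lat) * math.cos(lon))


def direction_to_ball_xy(direction):
    """Where on the ball photo (x, y in -1..1) a direction is reflected.

    The surface normal that bounces the camera ray into `direction` sits
    halfway between `direction` and the vector toward the camera (0, 0, 1).
    In an orthographic view, the x and y of that normal are the position
    on the ball photo.
    """
    dx, dy, dz = direction
    hx, hy, hz = dx, dy, dz + 1.0
    length = math.sqrt(hx * hx + hy * hy + hz * hz)
    if length < 1e-9:
        # Directly behind the ball: smeared around the whole rim.
        return (1.0, 0.0)
    return (hx / length, hy / length)


# A light 90 degrees off to the side of the camera axis.
x, y = direction_to_ball_xy(latlong_to_direction(math.pi / 2, 0.0))
```

A Lat/Long converter would loop over every pixel of the output image, turn it into a direction, and look up the matching spot on the ball photo. In this model, a light 90 degrees off to the side already lands about 71% of the way out to the rim, so everything behind the ball gets squeezed into the thin outer ring. That's why the edges of a mirrored ball are so stretched.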

Stitch It Together Using Photomerge

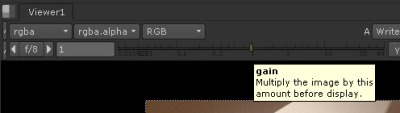

OK. You now have three square images that you have to stitch back together. Go back and open the three Lat/Long images in Photoshop. From here, you can stitch them together with Menu-Automate/Photomerge using the "Interactive Layout" option. The next window will place all three images into an area where you can rearrange them how you want. Once you have something that looks OK, press OK and it will make a layered Photoshop file with each layer having an automatically created mask. Next, I adjusted the exposure of one of the images, and you can make changes to the masks also. As you can see with my image on the right, when they stitched up, each one was a little lower than the next. This tells me that my tripod was not totally level when I took my pictures. I finalized my image by collapsing it all to one layer, and rotating it a few degrees so the horizon was back to being level. For the seam in the back, you can do a quick offset and a clone stamp, or just leave it alone.

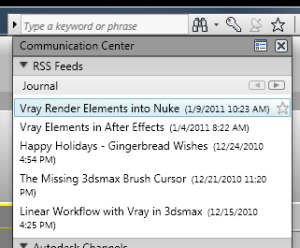

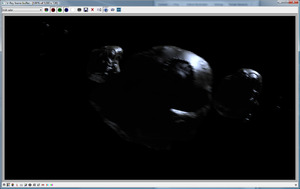

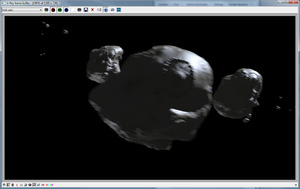

This topic is huge and I can only cover so much in this first post. Next week, I'll finish this off by talking about how I use HDR images within my vRay workflow and how to customize your HDR images so that you can tweak the final render to exactly what you want. Keep in mind that HDR images are just a tool for you to make great looking CG. For now, here are two HDRIs and a background plate that I've posted in my download section.

Park_Panorama.hdr Forest_Ball.hdr Forest_Plate.jpg

If you're looking for a cheap mirrored ball you can get one here at Amazon.com. It's only like $7!

Tuesday, March 15, 2011 at 1:27AM

I just feel sorry for who ever has to put them together.)

Lunch at Pixar is awesome. Back when I lived in SF I jumped at any chance to go have lunch over there. The food is great and it's real inexpensive. They always have daily specials and they make pasta and pizzas to order.