Happy Holidays - Gingerbread Wishes

Friday, December 23, 2011 at 4:06PM

Happy Holidays!

Just like they replay Rankin and Bass each year, I'll post gingerbread wishes again this year. Have a great holiday everyone, no matter what it is.

CGI

Wednesday, September 14, 2011 at 2:31PM

I helped my friend Josh Cox with a shot or two on his debut music video. The song is called "Bury Us Alive" by STRFKR. Thiago Costa did the massive particle simulations at the end.

Monday, August 22, 2011 at 11:23AM

I took some RED camera footage of the Sellwood Bridge from the east side, and I removed most of the bridge so I could have it collapse, caused by an initial explosion at the concrete pylon. Here are a couple of the tests.

First test where everything just falls all at once.

Here I've now got things timed out, but I need to remove the bounciness of the bridge and make it fall apart more.

Worked on the sim more and started working with Fume to see what I get. A good start, but much more work is needed.

Thursday, July 14, 2011 at 10:46PM

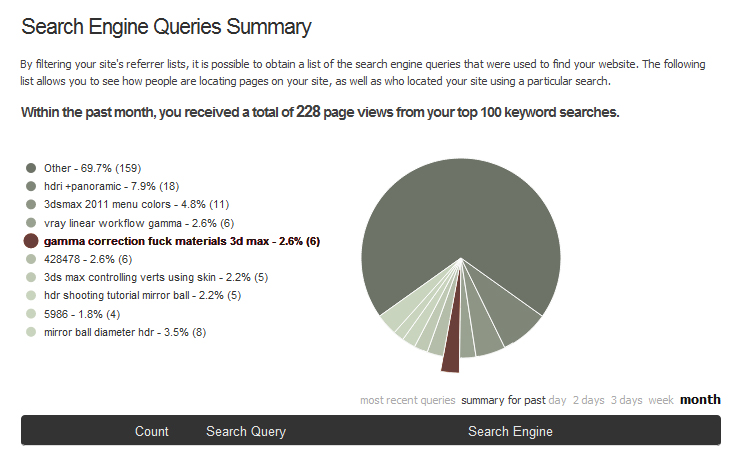

I looked at what people are searching for when they end up at my blog, and I found one of today's top search terms to be insightful.

I think it was this guy I work with. I could see him typing that in his browser.

Monday, March 14, 2011 at 11:29AM

If you're looking for a cheap mirrored ball, you can buy an 8" Stainless Steel Gazing Ball here at Amazon.com for about $22. They also have a smaller 6" Silver Gazing Globe for about $14. It can easily be drilled and mounted to a pipe or dowel so that it can be clamped to a C-stand or a tripod.

First, I'm no Paul Debevec. I'm not even smart enough to be his intern. But I thought I'd share my technique for making HDRIs. My point being, I might not be getting this all 100% correct, but I like my results. Please don't get all over my shit if I say something a little off. Matter of fact, if you find a better way to do something, please let me know. I write this to share with the CG community, and I would hope that people will share back with constructive criticism.

First, let's start by clearing something up. HDRIs are like ATMs. You don't have an ATM machine. That would be an Automatic Teller Machine Machine (ATMM?). See, there are two machines in that sentence now. The same is true of an HDRI. You can't have an HDRI image. That would be a High Dynamic Range Image Image. But you can have an HDR image. Or many HDRIs. If you're gonna talk like a geek, at least respect the acronym.

I use the mirrored ball technique and a panoramic technique for capturing HDRIs. Which one I use really depends on the situation and the equipment you have. Mirrored balls are a great HDR tool, but a panoramic HDR is that much better since it captures all 360 degrees of the lighting. However, panoramic lenses and mounts aren't cheap, and mirrored balls are very cheap.

Shooting Mirrored Ball Images

I've been shooting mirrored balls for many years now. Mirrored balls work pretty damn well for capturing much of a scene's lighting. Many times on a set, all the lights are coming from behind the camera, and the mirrored ball will capture these lights nicely. Where a mirrored ball starts to break down is when lighting is coming from behind the subject. It will capture some lights behind the ball, but since that light is only showing up in the very deformed edges of the mirrored ball, it's not gonna be that accurate for doing good rim lighting.

However, mirrored balls are cheap and easily available. You can get a garden gazing ball, or just a chrome Christmas ornament, and start taking HDR images today. Our smallest mirrored ball is one of those Chinese meditation balls with the bells in it. (You can get all zen playing with your HDRI balls.)

My balls are different sizes (isn't everyone's?), and I have 3. I use several different sizes depending on the situation. I have a 12" ball for large set live action shoots, and a 1.5" ball for very small miniatures. With small stop motion sets, there isn't a lot of room to work. The small 1.5" ball works great for that reason. I'll usually clamp it to a C-stand and hang it out over the set where the character will be. I also have a 4" ball that I can use for larger miniatures, or smaller live sets.

With everything set up like we've mentioned, I like to find the darkest exposure I can and expose it all the way up to being blown out. I often skip 2-3 exposure brackets in between shots to keep the number of files down. You can make an HDRI from only 3 exposures, but I like to get anywhere from 5-8 different exposures.
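
Here's a rough sketch of what that bracketing works out to. The shutter speeds and the 2-stop step are made-up values for illustration, not settings from an actual shoot, and `bracket_shutter_times` is just a hypothetical helper name.

```python
def bracket_shutter_times(fastest, slowest, stops_per_step=2):
    """Return shutter times in seconds, from the darkest exposure to the brightest.

    Each stop doubles the exposure, so stepping N stops multiplies the time by 2**N.
    """
    times = []
    t = fastest
    while t <= slowest * 1.0001:  # small tolerance for float rounding
        times.append(t)
        t *= 2 ** stops_per_step
    return times


# Assumed range: 1/4000 s (darkest) up to about 1 s (blown out), skipping every other stop.
brackets = bracket_shutter_times(fastest=1 / 4000, slowest=1.0, stops_per_step=2)
for t in brackets:
    print(f"1/{round(1 / t)} s")
```

Stepping more stops per shot means fewer files; stepping fewer means more overlap between exposures.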

When shooting a mirrored ball, shoot the ball as close as you can to where the CG subject will be. Don't freak out about it if you can't get exactly where the CG will be, but try to get it as close as you can. If the CG subject will move around a lot in the shot, then place the ball in an average position.

Shoot the ball from the movie plate camera angle. Meaning, set up your mirrored ball camera where the original plate camera was. This way, you'll always know that the HDRI will align to the back plate. Usually, on set, they will start moving the main camera out of the way as soon as the shot is done. I've learned to ask for the lights to be left on for 3 minutes. (5 min sounds too long on a live set. I love asking for 3 minutes since it sounds like less.) Take your mirrored ball photos right after the shot is done. Make nice with the director of photography on set. Tell him how great it looks and that you really hope to capture all the lighting that's been done.

Don't get caught up with little scratches being on your ball. They won't show up in your final image. Also, don't worry about your own reflection being in the ball. You give off so little bounce light you won't even register in the final scene. (Unless you're blocking a major light on the set from the ball.)

We use a Nikon D90 as our HDR camera. It saves raw NEF files and JPG files simultaneously, and I use the JPGs sort of as thumbnail images of the raw files. I'm on the fence about using raw NEF files over the JPGs since you end up blending 6-8 of them together. I wonder if it really matters to use the raw files, but I always use them just in case it does really matter.

To process your mirrored ball HDR image, you can use a bunch of different programs, but I just stick with any recent version of Photoshop. I'm not holding your hand on this step. Photoshop and Bridge have an automated tool for processing files to make an HDR. Follow those procedures and you'll be fine. You could also use HDR Shop 1.0 to make your HDR images. It's still out there for free and is a very useful tool. I talk about it later when making the panoramic HDRIs.
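
If you're curious what a merge like this does under the hood, here's a minimal sketch of one common approach: a weighted average of per-exposure radiance estimates. It assumes linear pixel values (like raw data) and is not what Photoshop or HDR Shop actually run. The function name and the toy scene values are made up for illustration.

```python
import numpy as np


def merge_exposures(images, exposure_times):
    """Merge linear images (float arrays in 0..1) into one radiance map."""
    numerator = np.zeros_like(images[0], dtype=np.float64)
    denominator = np.zeros_like(images[0], dtype=np.float64)
    for img, t in zip(images, exposure_times):
        img = np.asarray(img, dtype=np.float64)
        # Hat weight: trust mid-tones, distrust crushed blacks and blown-out whites.
        weight = 1.0 - np.abs(2.0 * img - 1.0)
        # Dividing by exposure time turns a pixel value into a radiance estimate.
        numerator += weight * (img / t)
        denominator += weight
    # Where every exposure was clipped the weights sum to zero; avoid dividing by it.
    return numerator / np.maximum(denominator, 1e-8)


# Toy scene with a wide brightness range, "photographed" at four shutter speeds.
scene = np.array([0.05, 1.0, 20.0, 400.0])
times = [1 / 1000, 1 / 60, 1 / 4, 1.0]
shots = [np.clip(scene * t, 0.0, 1.0) for t in times]

hdr = merge_exposures(shots, times)
print(hdr)
```

No single shot in the toy example holds the whole range without clipping, which is the reason for bracketing in the first place.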

Shooting Panoramic Images

The other technique is the panoramic HDRI. This is a little more involved and requires some equipment. With this method, I shoot 360 degrees from the CG subject's location with a fisheye lens, and use that to get a cylindrical panoramic view of the scene. With this setup you get a more complete picture of the lighting since you can now see 360 degrees without major distortions. However, it's not practical to put a big panoramic swivel head on a miniature set. I usually use small meditation balls for that. Panoramic HDRIs are better for live action locations where you have the room for the tripod and spherical mount. To make a full panoramic image you'll need two things: a fisheye lens and a swivel mount.

First, you'll need something that can take a very wide angle image. For this I use the Sigma 4.5mm f/2.8 EX DC HSM Circular Fisheye Lens for Nikon Digital SLR Cameras ($899). Images taken with this lens will be small and round and capture about 180 degrees. (A cheaper option might be something like a fisheye converter lens, but you'll have to do your own research on those before buying one.)

You'll need a way to take several of these images in a circle, pivoted about the lens. We want to pivot around the lens so that there will be minimum parallax distortion. With a very wide lens, moving the slightest bit can make the images very different and not align for our HDRI later. To do this, I bought a Manfrotto 303SPH QTVR Spherical Panoramic Pro Head (Black) that we can mount to any tripod. This head can swivel almost 360 degrees. A step down from this is the Manfrotto 303PLUS QTVR Precision Panoramic Pro Head (Black), which doesn't allow 360 degrees of swivel, but with the 4.5mm fisheye lens, I found you don't really need to tilt up or down to get the sky and ground. You'll get it by just panning the head around.

Once you've got all that, it's time to shoot your panoramic location. You'll want to set up the head so that the center of the lens is floating right over the center of the mount. Now in theory, this lens can take a 180 degree image, so you only need front and back, right? Wrong. You'll want some overlap, so take 3 sets of images for the panorama, each 120 degrees apart: 0, 120, and 240. That will give us the coverage we need to stitch up the image later.
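
The overlap math is simple enough to sketch. This assumes the lens really covers a clean 180 degrees and the shots are evenly spaced; `overlap_degrees` is just a name I made up for the example.

```python
def overlap_degrees(lens_fov, shot_count):
    """Overlap between neighbouring shots spaced evenly around a full 360 degrees."""
    spacing = 360 / shot_count
    return lens_fov - spacing


for shots in (2, 3, 4):
    print(shots, "shots:", overlap_degrees(180, shots), "degrees of overlap")
```

Two shots leave nothing to blend at the seams, while three leave a comfortable margin between neighbours.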

Just like the mirrored ball, I like to shoot the image back at the direction of the plate camera. Set up the tripod so that 120 degrees is pointing towards the original camera position. Then rotate back to 0 and start taking your multiple exposures. Once 0 is taken, rotate to 120, and again to 240 degrees. When we stitch this all together, the 120 position will be in the center of the image, and the seam of the image will be at the back where 0 and 240 blend.

Don't worry about people walking through your images, especially on a live action set. There is no time on set to wait for perfect conditions. By the time you blend all your exposures together, that person will disappear. Check out the Forest_Ball.hdr image. You can see me taking the photos, and a ghost in a yellow shirt on the right side.

Processing The Panoramic Images

To build the panoramic from your images, you'll need to go through three steps. 1. Make the HDR images (just like for the mirrored ball). 2. Transform the round fisheye images to rectangular latitude/longitude images. 3. Stitch it all back together in a cylindrical panoramic image.

Like we talked about before, Adobe Bridge can easily take a set of different exposures and make an HDR out of them. Grab a set, and go to the menu under Tools/Photoshop/Merge to HDR. Do this for each of your 0, 120 and 240 degree images and save them out.

Photoshop doesn't have any tool for distorting a fisheye image to a Lat/Long image. There are some programs that I investigated, but they all cost money. I like free. So to do this, grab a copy of HDR Shop 1.0. Open up each image inside HDR Shop and go to the menu Image/Panorama/Panoramic Transformations. We set the Source Image to Mirrored Ball Closeup and the Destination Image to Latitude/Longitude. Then set the resolution in height to something close to the original height.
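
For the curious, here's a sketch of the kind of remapping a panoramic transformation does: for each pixel of the Lat/Long image, work out which direction it looks in, then find where that direction lands in the source image. I'm using the textbook model of an ideal mirrored ball seen from far away, which is an assumption on my part and not HDR Shop's actual code. The function names and axis conventions are made up for the example.

```python
import math


def latlong_to_direction(u, v):
    """Map Lat/Long image coords (u, v in 0..1) to a unit direction.

    u = 0.5, v = 0.5 is the centre of the panorama, pointing at the camera (+Z).
    """
    lon = (u - 0.5) * 2.0 * math.pi  # -pi .. pi, left to right
    lat = (0.5 - v) * math.pi        # +pi/2 at the top, -pi/2 at the bottom
    return (math.cos(lat) * math.sin(lon),
            math.sin(lat),
            math.cos(lat) * math.cos(lon))


def direction_to_ball(d):
    """Map a unit direction to mirrored-ball image coords (x, y in -1..1).

    Assumes an ideal mirror sphere seen by a distant camera sitting on +Z.
    """
    dx, dy, dz = d
    # The surface normal bisects the reflected direction and the direction to the
    # camera, so its x and y components are the position on the ball image.
    length = math.sqrt(2.0 * (1.0 + dz))
    if length < 1e-9:
        return None  # directly behind the ball: smeared around the rim
    return (dx / length, dy / length)


# Centre of the panorama, then 90 degrees to the right of it.
print(direction_to_ball(latlong_to_direction(0.5, 0.5)))
print(direction_to_ball(latlong_to_direction(0.75, 0.5)))
```

A direction 90 degrees off to the side already lands about 70% of the way out from the centre of the ball, which is why everything behind the ball gets squeezed into the rim.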

OK. You now have three rectangular images that you have to stitch back together. Go back and open the three Lat/Long images in Photoshop. From here, you can stitch them together with Menu-Automate/Photomerge using the "Interactive Layout" option. This next window will place all three images into an area where you can re-arrange them how you want. Once you have something that looks OK, press OK and it will make a layered Photoshop file with each layer having an automatically created mask. Next, I adjusted the exposure of one of the images, and you can make changes to the masks also. As you can see with my image on the right, when they stitched up, each one was a little lower than the next. This tells me that my tripod was not totally level when I took my pictures. I finalized my image by collapsing it all to one layer and rotating it a few degrees so the horizon was back to being level. For the seam in the back, you can do a quick offset and a clone stamp, or just leave it alone.

This topic is huge and I can only cover so much in this first post. Next week, I'll finish this off by talking about how I use HDR images within my V-Ray workflow and how to customize your HDR images so that you can tweak the final render to exactly what you want. Keep in mind that HDR images are just a tool for you to make great looking CG. For now, here are two HDRIs and a background plate that I've posted in my download section.

Park_Panorama.hdr Forest_Ball.hdr Forest_Plate.jpg

If you're looking for a cheap mirrored ball, you can get one here at Amazon.com. It's only like $7!

Monday, February 21, 2011 at 5:46PM

Hey everybody, I decided that I would try doing some video blog entries on some of the topics I've been going over. In this first one, I'm going over the stuff I talked about with spring simulations. I was tired when I recorded this, so cut me some slack. I'm keeping these quick and dirty, designed to just get you going with a concept or feature, not hold your hand the whole way through.

The original posted article on spring simulations with Flex is posted here.

Wednesday, February 2, 2011 at 2:30AM

Ever wish you could create a soft body simulation on your mesh to bounce it around? 3ds max has had this ability for many years now, but it's tricky to set up and not very obvious that it can be done at all. (This feature could use a re-vamp in an upcoming release.) I pull this trick out whenever I can, so I thought I'd go over it for all my readers. It's called the Flex modifier, and it can do more than you realize.

A Brief History of Flex

The Flex modifier originally debuted in 1999 within 3D Studio Max R3. I'm pretty sure Peter Watje based it on a tool within Softimage 3D called Quick Stretch. (The original Softimage, not XSI.) The basic Flex modifier is cool, but the results are less than realistic. In a later release, Peter added the ability for Flex to use springs connected between each vertex. With enough springs in place, meshes will try to hold their shape and jiggle with motion applied to them.

Making Flex into a Simulation Environment

So we're about to deal with a real-time spring system. Springs can do what we call "Explode". This means the math goes wrong and the vertices fly off into infinite space. Also, if you accidentally set up a couple thousand springs, your system will just come to a halt. So here are the setup rules to make a real time modifier more like a "system" for calculating the results...

Setting Up the Spring Simulation

OK, I did this the other day on a real project, but I can't show that right now, so yes, I'm gonna do it on a teapot. Don't gimme shit for it. I would do it on an elephant's trunk or something like that... wait a sec, I will do it on an elephant trunk! (Mom always said a real world example is better than a teapot example.)

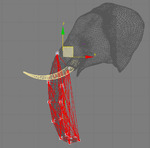

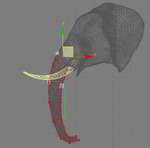

OK, let's start with this elephant's head. (This elephant model is probably from 1999 too!) I'll create a simple mesh model to represent the elephant's trunk. The detail here is important. I start with a simple model, and if the simulation collapses or looks funky, I'll go all the way back to the beginning, add more mesh, and remake all my springs. (I did that at the end of this tutorial.) First, let's disable the old method of Flex by turning off Chase Springs and Use Weights. Next, let's choose a simulation method.

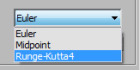

There are 3 different sim types. I couldn't tell you the difference. I do know that they get better and slower from top to bottom. With that said, set it to Runge-Kutta4, the slowest and the most stable. (Slow is relative. In this example, it still gives me real time feedback.)
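
To get a feel for why the integrator matters, here's a toy sketch of a single stiff spring stepped two ways: with explicit Euler (the simplest textbook integrator, used here only for comparison) and with a classic 4th-order Runge-Kutta step. This is not Flex's code, and the stiffness, mass and time step are made-up numbers.

```python
def spring_accel(x, k=100.0, m=1.0):
    """Acceleration of a mass on a spring stretched by x (no damping)."""
    return -k * x / m


def euler_step(x, v, dt):
    return x + v * dt, v + spring_accel(x) * dt


def rk4_step(x, v, dt):
    k1x, k1v = v, spring_accel(x)
    k2x, k2v = v + 0.5 * dt * k1v, spring_accel(x + 0.5 * dt * k1x)
    k3x, k3v = v + 0.5 * dt * k2v, spring_accel(x + 0.5 * dt * k2x)
    k4x, k4v = v + dt * k3v, spring_accel(x + dt * k3x)
    x_new = x + dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
    v_new = v + dt / 6.0 * (k1v + 2 * k2v + 2 * k3v + k4v)
    return x_new, v_new


def energy(x, v, k=100.0, m=1.0):
    return 0.5 * k * x * x + 0.5 * m * v * v


def simulate(step, steps=200, dt=0.05):
    """Stretch the spring by 1 unit, let go, and return the energy at the end."""
    x, v = 1.0, 0.0
    for _ in range(steps):
        x, v = step(x, v, dt)
    return energy(x, v)


start_energy = energy(1.0, 0.0)
euler_energy = simulate(euler_step)
rk4_energy = simulate(rk4_step)
print("start:", start_energy)
print("euler:", euler_energy)
print("rk4:  ", rk4_energy)
```

With these numbers the Euler version gains energy on every step until the values are astronomically large, which is the "explode" behavior, while the Runge-Kutta version stays close to where it started.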

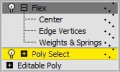

OK. Before we go making the springs, let's decide which vertices will be held in place and which will be free to be controlled by Flex. Go right below the Flex modifier and add a Poly Select modifier. Select the verts that you want to be free and leave the held verts unselected. By using the select modifier, we can utilize the soft selection feature so that the effect has a nice falloff. Turn on soft selection and set your falloff.

About the Spring Types

Now that we know which verts will be free and which will be held, let's set up the springs. Go to the Weights and Springs sub-object. Open the Advanced rollout and turn on Show Springs. Now, there are 2 types of springs. One holds the verts together by Edge Lengths. These keep the edge length correct over the life of the sim. The other holds verts together that are not connected by edges. These are called Hold Shape Springs. I try to set up only as many springs as I need for the effect to work.

Making the Springs

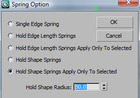

To make a spring, you have to select 2 or more vertices, decide which spring type you are adding in the options dialog, and press the Add Spring button. The options dialog has a radius "filter". By setting the radius, it will NOT make springs between verts that are farther apart than that distance. This is useful when adding a lot of springs at once, but I try to be specific when adding springs. I first select ALL the vertices and set the dialog to Edge Length springs with a high radius. Then close the dialog and press Add Springs. This will make blue springs on top of all the polygons' edges. (In new releases, you cannot see these springs due to some weird bug.)

After that, open the dialog again, choose Shape springs applied to the selected verts, and then start adding shape springs. These are the more important springs anyway. You can select all your verts and try to use the radius to apply springs, but it might look something like the image to the left (Bad Springs). If you select 2 "rings" of your mesh at a time and add springs carefully, it will look more like the one on the right (Good Springs). (NOTE: It's easy to overdo the amount of springs. Deleting springs is hard to do since you have to select the 2 verts that represent the spring, so don't be upset about deleting all the springs and starting over.)

When making your shape springs, you don't want to overdo it. Too many springs can make it unstable. Also, each spring sets up a constraint. Keep that in mind. If you do try to use the radius to filter the amount of springs, use the tape measure to measure the distance between the verts so you know what you will get after adding springs.
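
Here's a quick sketch of why the radius deserves a tape measure. It counts how many vertex pairs fall within a given distance on a stand-in tube mesh, assuming a radius filter links every selected pair closer than that distance. The mesh (6 rings of 8 verts, 1 unit apart) and the `count_pairs_within` helper are made up for the example, and this is not how Flex builds its springs internally.

```python
import itertools
import math


def count_pairs_within(verts, radius):
    """Count vertex pairs that are no farther apart than the radius."""
    return sum(1 for a, b in itertools.combinations(verts, 2)
               if math.dist(a, b) <= radius)


# Stand-in "trunk": 6 rings of 8 verts on a tube of radius 1, rings spaced 1 unit apart.
verts = [(math.cos(2 * math.pi * i / 8), math.sin(2 * math.pi * i / 8), float(ring))
         for ring in range(6) for i in range(8)]

for radius in (0.8, 1.1, 1.5, 2.5):
    print(radius, count_pairs_within(verts, radius))
```

The pair count climbs quickly as the radius grows, so a slightly too generous radius can bury the mesh in springs.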

Working with the Settings

In the rollout called "Simple Soft Bodies" there are 3 controls: a button that adds springs without controlling where, and the Stretch and Stiffness parameters. I don't recommend using the Create Simple Soft Body action. (Go ahead and try it to see what it does.) However, the other parameters still control the custom springs you make. Let's take a look at my first animation and see how we can make it better.

You know how we can make it better? Take more than 20 seconds to animate that elephant head. What the hell, Ruff? You can't even rig up a damn elephant head for this tutorial? Nope. 6 rotation keys is all you get. Anyway, the flex is a bit floaty, huh? Looks more like liquid in outer space. We need the springs to be a lot stiffer. Turn the Stiffness parameter up to 10. Now let's take another look.

Better, but the top has too few springs to hold all this motion. It's twisting too much and doesn't look right.

Let's add some extra long springs to hold the upper part in place. To do this, instead of adding springs just between connected verts, we can select a larger span of verts and add a few more springs. This will result in a stiffer area at the top of the trunk. Now let's see the results of this. (NOTE: The image to the left has an overlay mode to show the extra springs added in light pink. See how they span more than one edge now.)

Looking good. In my case here, I see the trunk folding in on itself. You can add springs to any set of vertices to hold things in place. The tip of the trunk flies around too much. I'll create a couple of new springs from the top all the way down to the tip. These springs will hold the overall shape in place without being too rigid.

Now let's see the result on the real mesh. Skin wrap the mesh with the spring mesh. I went back and added 2x more verts to the base spring mesh, then I redid the spring setup since the vertex count changed.

I then made the animation more severe so I could show you adding a deflector to the springs. I used a scaled out sphere deflector to simulate collision with the right tusk. Now don't go trying to use the fucking UDeflector and picking all kinds of hi-res meshes for it to collide with. That will lock up your machine for sure. Just because you can do something in 3dsmax doesn't mean you should do it.

So yeah, that's it. Now I'm not saying my elephant looks perfect, but you get the idea. Animate your point cache amount to even cross dissolve different simulations. Oh, and finally, stay away from the Enable Advanced Springs option. (If you want to see a vertex explosion, fiddle with those numbers a bunch.)

Fred Ruff

Just talked to Peter Watje about flex. Thought I would share his knowledge.

"The biggest issue is when your sim goes nuts turn down the stiffness, turn up your simulation type, and turn up samples."

He also mentioned that you can try using flex with FFD cages... interesting idea...

Thanks Peter

Sunday, January 9, 2011 at 1:23PM

As a follow up to the article I wrote about render elements in After Effects, this article will go over getting render elements into The Foundry's Nuke.

I've been learning Nuke over the last few months and I have to say it's my new favorite program. (Don't worry 3dsmax, I still love you too.) Nuke's floating point core and its node based workflow make it the best compositor for the modern day 3d artist to take his/her renderings to the next level. (In my opinion, of course.) Don't get me wrong, After Effects still has a place in the studio for simple animation and motion graphics, but for finishing your 3d renders, Nuke is the place to be.

There are many things to consider before adding render elements into your everyday workflow. Read this article on render elements in After Effects before making that decision. You also might want to look over this article about linear workflow.

Nuke and Render Elements

Drag all of your non-gamma-corrected, 16-bit EXR render elements into Nuke. Merge them all together and set the Merge node to Plus. Nuke does a great job at handling different color spaces for images, and when dragging in EXRs, they will be set to linear by default.
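
Outside of Nuke, the Plus merge boils down to a per-pixel sum of linear values. Here's a tiny sketch with made-up 2x2 images standing in for the EXR data. The element names and pixel values are placeholders, not a real render.

```python
import numpy as np

# Hypothetical 2x2 linear-light render elements (RGB), standing in for EXR data.
diffuse = np.full((2, 2, 3), 0.30)
reflection = np.full((2, 2, 3), 0.10)
specular = np.full((2, 2, 3), 0.05)

# A Plus merge is a per-pixel sum of the inputs.
beauty = diffuse + reflection + specular
print(beauty[0, 0])
```

The sum only rebuilds the beauty pass when the inputs are still linear.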

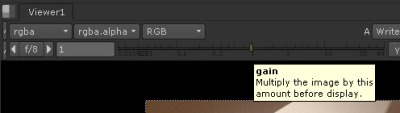

Nuke applies a color lookup in the viewport, not at the image level, so the additive math for our render elements will be correct once we add all the elements together. (If it looks washed out, your renders probably have gamma baked into them from 3dsmax. Check that your output gamma is set to 1.0, not 2.2.) If you want to play with the viewport color lookup, grab the viewport gain or gamma sliders and play with them. Keep in mind that this will not change the output of your images saved from Nuke. This is just an adjustment to your display.

Alpha

After you add together all the elements, the alpha will be wrong again. Probably because we are adding something that isn't pure black to begin with. (My image has a gray alpha through the windows.) Drag in your background node and add another Merge node in Nuke. Set this one to Matte. Pull the elements into the A channel and pull the background into the B channel. If you do notice something through the Alpha it will probably look wrong. The easiest way to fix this is to grab the mask channel from the new Merge node and hook it up to any one of the original render elements. This will then get the alpha from that single node, without being added up.
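
One plausible way to picture the alpha problem: if every element carries its own copy of the alpha, adding the elements adds the alphas too. This sketch uses three made-up alpha values (solid wall, semi-transparent window, empty background) and is my guess at the mechanism, not something I've confirmed inside Nuke.

```python
import numpy as np

# Hypothetical alpha for one row of pixels: solid wall, tinted window, empty background.
element_alpha = np.array([1.0, 0.5, 0.0])

# Three render elements that each carry the same alpha channel.
elements = [element_alpha, element_alpha, element_alpha]

summed_alpha = sum(elements)  # what adding the elements together does to alpha
single_alpha = elements[0]    # alpha taken from just one of the elements

print("summed:", summed_alpha)
print("single:", single_alpha)
```

Taking the alpha from a single element keeps it in the 0 to 1 range.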

Grading the Elements

That's pretty much it. You now can add nodes to each of the separate elements and adjust the look of your final image. If you read my article about render elements and After Effects, you will remember that I cranked the gain on the reflection element and the image started to look chunky. You can see here that when I put a Grade node on the reflection element and turn up the gain, I get better results. (NOTE: my image is grainy due to lack of samples in my render, not from being processed in 8 bit like After Effects does.)
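As a small illustration of why grading one element before the Plus works, here is a sketch in plain Python with floating point values. The `grade` and `plus` helpers and the pixel values are assumptions for the example, not Nuke's API. The gain only touches the reflection element, and nothing is rounded to 8 bit steps along the way.

```python
def grade(pixel, gain=1.0):
    """Multiply every channel by a gain, like the gain knob on a Grade node."""
    return tuple(c * gain for c in pixel)


def plus(*pixels):
    """Add pixels channel by channel."""
    return tuple(sum(channel) for channel in zip(*pixels))


# Hypothetical linear RGB values for one pixel.
everything_else = (0.5, 0.375, 0.25)   # GI + lighting + refraction + specular
reflection = (0.0625, 0.0625, 0.125)

before = plus(everything_else, reflection)
after = plus(everything_else, grade(reflection, gain=4.0))

print("before:", before)
print("after: ", after)
```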

This is just the beginning. Nuke has many other tools for working with 3d renders. I hope to cover more of them in later posts.

3ds max, CGI, Compositing

Tuesday, January 4, 2011 at 11:22AM

Although I've known about render elements since their inception back in 3dsmax 4, I've only really been working with split out render elements for a couple years or so.

The idea seems like a dream right? Render out your scene into different elements and give control of different portions of the scene so that you can develop that final "look" at the compositing phase? However, as I looked into the idea at my own studio, it's not that simple. This is a history of my adventure of adapting render elements into my own workflow.

The Gamma Issue

The first question for me as a technical director is "Can I really put them back together properly?" I've met so many people who tried at one point, but got frustrated and gave up. It's a hassle and a bit of an enigma to get all the render elements back together properly. One of the main problems for me was that you can't put render elements back together if they have gamma applied to them. I had already let gamma into my life. I was still often giving compositors an 8 bit image saved with 2.2 gamma from 3dsmax. So for render elements to work, I need to save out files without gamma applied.
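The reason gamma breaks the add is just arithmetic. Here is a minimal sketch in Python, assuming a plain 2.2 power curve (real display curves differ a little) and two made-up linear values. Adding first and applying gamma once is not the same as adding values that already have gamma in them.

```python
GAMMA = 2.2


def encode(linear):
    """Apply a simple 2.2 gamma curve to a linear value."""
    return linear ** (1.0 / GAMMA)


# Two hypothetical linear element values for the same pixel.
a, b = 0.2, 0.3

correct = encode(a + b)        # add in linear, gamma once: about 0.73
wrong = encode(a) + encode(b)  # add gamma'd values: about 1.06, blown out

print("add then gamma:", round(correct, 3))
print("gamma then add:", round(wrong, 3))
```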

Linear Workflow

Now that you're saving images out of max without gamma, you don't want to save 8 bit files, since the blacks will get crunchy when you gamma them up in composite. So you need to save your files as 16 bit EXR's for render elements to work. You also need to make sure no gamma is applied to them. Read this post on Linear Workflow for more on that process.

Storage Considerations

With the workflow figured out, you are now saving larger files than you would in an old school 8 bit workflow. Also, since you're splitting this into 5-6 render element sequences, you're now saving more of these larger images. Make sure your studio IT guy knows you just increased the storage needs of your project many times over.
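For a rough feel of "many times", here is a back-of-the-envelope sketch in Python. It assumes uncompressed RGBA frames at 1920x1080 and six element sequences, which are my numbers for illustration. Real Targa and EXR files are usually compressed, so actual sizes on disk will be smaller, but the ratio gives the idea.

```python
def frame_bytes(width, height, channels, bytes_per_channel):
    """Uncompressed size of one frame in bytes."""
    return width * height * channels * bytes_per_channel


WIDTH, HEIGHT, CHANNELS = 1920, 1080, 4  # assumed HD RGBA frames
ELEMENT_SEQUENCES = 6                    # assumed number of render elements

old_8bit = frame_bytes(WIDTH, HEIGHT, CHANNELS, 1)                       # one 8 bit image
new_16bit = ELEMENT_SEQUENCES * frame_bytes(WIDTH, HEIGHT, CHANNELS, 2)  # six 16 bit images

print("8 bit single frame:  %.1f MB" % (old_8bit / 1e6))
print("16 bit element set:  %.1f MB" % (new_16bit / 1e6))
print("increase: %.0fx" % (new_16bit / old_8bit))
```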

Composite Performance

So now you've got all those images saved on your network and you've figured out how to put them back together in composite, but how much does this slow down your compositing process? Well, if you are your own compositor, no problem. You know the benefits, and probably won't mind the fact that you're now pulling 5-6 plates instead of one. You have to consider if the speed hit is worth it. You should always have the original render so the compositor can skip putting the elements back together at all. (Comping one image is faster than comping 5-6.) I mean, if the compositor doesn't want to put them back together, and the director doesn't know he can ask to affect each element, why the hell are you saving them in the first place, right? Also, if people aren't trained to work with them, they might put them back together wrong and not even know it. Finally, to really get them to work right in After Effects, you'll probably have to start working in 16 bpc mode. (Many plugins in AE don't work in 16 bit.)

After all these considerations, it's really up to you and the people around you to decide if you want to integrate it into your workflow. It's best to practice it a few times before throwing it into a studio's production pipeline. If you do decide to try it out, I'll go over the way that I've figured out how to save them out properly and how to put them back together in After Effects so that you can have more flexibility in your composite process.

Setting up the Elements in After Effects

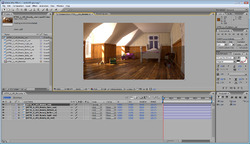

I don't claim to be any expert on this by far, so try to cut me some slack. I'll go over how I started working with render elements specifically in After Effects CS3. I'm using this attic scene as my example. It's a little short on refraction and specular highlights, but they are there, and they end up looking correct in the end.

Global Illumination, Lighting, Reflection, Refraction, and Specular.

I use just these five elements. Add Global Illumination, Lighting, Reflection, Refraction and Specular as your elements. It's like using the primary channels out of Vray. You can break up the GI into Diffuse multiplied by Raw GI, and the Lighting can be created from Raw Lighting and Shadows, but I just never went that deep yet. (After writing this post, I'll probably get back to it and see if I can get it working with all those channels as well.) The bottom line is that this is an easy setup, so call me a cheater.

Sub Surface Scattering

I've noticed that if you use the SSS 2 shader in your renderings, you need to add that as another element. Also, it doesn't add up with the others, so it won't blend back in. It will just lay over the final result.

I usually turn off the Vray frame buffer since I've had issues with the elements being saved out if that is on. I use RPManager and Deadline 4 for all my rendering, and with the Vray frame buffer on, I've had problems getting the render elements to save out properly.

Bring them into After Effects

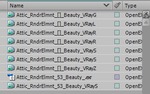

I'm showing this all in CS3. I'm working with Nuke more often and hope to detail my experience there too in a later post. Load your five elements into AE. As I did this, I ran into something that happens often in After Effects: long file names. AE doesn't handle the long filenames that can be generated when working with elements. So learn from this and give your elements nice short names. Otherwise, you can't tell them apart.

Before "Preserve RGB"

Next, make a comp for all the elements and set all the modes to Add. With addition it doesn't matter what the order is. In the image to the left I've done that and laid the original result over the top to see if it's working right. It's not. The lower half is the original, the upper half is the added elements. The problem is the added gamma. After Effects is interpreting the images as linear and adding gamma internally.

Adding Final Gamma

So now when they are added back up, the math is just wrong. The way to fix this is to right click on the source footage and change the way it's interpreted. Set the interpretation to preserve the original RGB color. Once this is done, your image should now look very dark. Now that the elements are added back together we can apply the correct gamma once. (And only once, not 5 times.) Add an adjustment layer to the comp and add an Exposure effect to the adjustment layer. Set the gamma to 2.2 and the image should look like the original.
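The check I did by laying the original over the added elements can be sketched in Python like this. The element values are hypothetical, `add_layers`, `apply_gamma` and `matches` are names I made up, and a plain 2.2 power curve stands in for whatever After Effects does internally. Adding the linear layers and applying gamma once matches the original; applying gamma to each layer first does not.

```python
GAMMA = 2.2


def add_layers(layers):
    """Add RGB layers channel by channel (Add blending mode)."""
    return tuple(sum(channel) for channel in zip(*layers))


def apply_gamma(pixel, gamma=GAMMA):
    """Apply a simple power-curve gamma to every channel."""
    return tuple(max(c, 0.0) ** (1.0 / gamma) for c in pixel)


def matches(a, b, tolerance=1e-6):
    """True when two pixels agree within a small tolerance."""
    return all(abs(x - y) <= tolerance for x, y in zip(a, b))


# Hypothetical linear values for one pixel of each element.
layers = [
    (0.125, 0.125, 0.0625),      # global illumination
    (0.25, 0.1875, 0.125),       # lighting
    (0.0625, 0.0625, 0.0625),    # reflection
    (0.03125, 0.03125, 0.0625),  # refraction
    (0.03125, 0.0, 0.0),         # specular
]
original_linear = (0.5, 0.40625, 0.3125)  # the straight render of the same pixel

original = apply_gamma(original_linear)
gamma_once = apply_gamma(add_layers(layers))                  # gamma applied one time
gamma_per_layer = add_layers([apply_gamma(p) for p in layers])  # gamma applied five times

print("gamma once matches original:     ", matches(gamma_once, original))
print("gamma per layer matches original:", matches(gamma_per_layer, original))
```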

Dealing with Alpha

Next the alpha needs to be dealt with. The resulting added render elements always seem to have a weird alpha, so I always add back the original alpha. One of the first issues is if your transparent shaders aren't set up properly. If you're using Vray, set the refraction "Affect Channels" dropdown to All channels.

Pre-comp everything into a new composition. I've added a background image below my comp to show the alpha problem. The right side shows the original image, and the left shows the elements' resulting alpha. So I add one of the original elements back on top, and grab its alpha using a track matte. Note that my ribbed glass will never refract the background, just show it through with the proper transparency.

When this is all said and done, the alpha will be right and the image will look much like it did as a straight render. See this final screenshot.

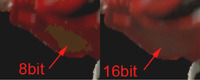

OK, remember why we were trying to get here in the first place? So we could tweak each element, right? So let's do that.

8 bit Failing When Adjusted

Let's take the reflection element and turn up its exposure, for example. Select that element in the element pre-comp and add >Color Correct>Exposure. In my example, I cranked the exposure up to 5. This boosts the reflection element very high, but not unreasonably so. However, since After Effects is in 8 bpc (B-its P-er C-hannel) you can see that the image is now getting crushed.
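Here is a small Python sketch of why the boost falls apart in 8 bpc. It assumes the exposure value is in stops (each stop doubles the value) and uses a made-up ramp of dark reflection values; the `quantize` and `boost` helpers are mine, not After Effects'. The values are rounded to the project bit depth first, then boosted, and we count how many different shades survive.

```python
def quantize(value, bits):
    """Round a 0-1 value to the nearest level available at this bit depth."""
    max_code = (1 << bits) - 1
    return round(value * max_code) / max_code


def boost(value, stops):
    """Raise exposure by a number of stops and clip at white."""
    return min(value * 2.0 ** stops, 1.0)


# Hypothetical dark reflection values: a smooth ramp from 0.000 to 0.031.
ramp = [i / 1000.0 for i in range(32)]


def shades_after_boost(bits, stops=5):
    """How many distinct shades are left once the ramp is stored and boosted."""
    return len({boost(quantize(v, bits), stops) for v in ramp})


print("8 bpc shades: ", shades_after_boost(8))
print("16 bpc shades:", shades_after_boost(16))
```

The 8 bpc ramp collapses into a handful of bands, which is the chunky look in the screenshot, while the 16 bpc ramp keeps every step.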

So, now we need to switch the comp to 16 bpc. You can do that by holding ALT while clicking the 8 bpc under the project window. Switch it to 16 bpc and everything should go back to normal. But note that we're now comping at 16 bit and AE might be a bit slower than before. This is only a result of cranking on the exposure so hard. You can avoid this by doubling up the reflection element instead of cranking it with exposure. Keep in mind that many plugins don't work in 16 bit mode in After Effects.

That's about it for After Effects. I'm curious how CS5 has changed this workflow, but we haven't pulled the trigger on upgrading to CS5 just yet. I'm glad, because I've been investigating other compositors like Fusion and Nuke. I'm really loving how Nuke works and I'll follow this article up with a Nuke one if people are interested in it.

Wednesday, December 15, 2010 at 7:25PM

These days, most shops are using a linear workflow for lighting and rendering computer graphics. If you don't know what that means, read on. If you do know, and want to know more about how to do it specifically in 3dsmax, read on as well.

Why use a linear workflow?

The first thing about linear workflow is that it can actually make your CG look better and more realistic. Do I really need to say any more? If that doesn't convince you, the second reason is so you can composite your images correctly. (Especially when using render elements.) Also, it gives you more control to re-expose the final without having to re-render all your CG. And finally, many SLR cameras and digital cameras now support linear images, so it makes sense to stay linear all throughout the pipeline.

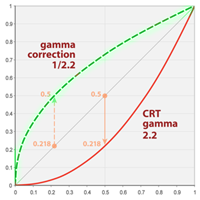

A bit on Gamma

Let's start with an example of taking a photo on a digital camera. You take a picture, you look at it, it looks right. However, you should know that the camera encoded a 2.2 gamma curve into the image already. Why? Because they are taking into account the display of this image on a monitor. A monitor or TV is not a linear device. So the camera maker applies a gamma curve to the image to compensate. A gamma encoded curve looks something like this. The red curve shows how a monitor handles the display of the image. Notice the green line, which is 2.2 gamma. It's directly opposite to the monitor display, and when they combine, we get the gray line in the middle. So gamma is about compensation. Read more on gamma here.

The problem comes in when you start to apply mathematics to the image (like compositing or putting render elements back together). Now the mathematics are off, since the original image has been bent to incorporate the gamma. So the solution is to work with the original linear space images, and apply gamma to the result, not the source. NOTE: Linear images look darker with high contrast until you apply the display gamma to them. TV's use a gamma of 2.2.

The problem also comes with computer generated imagery. All of CG is essentially mathematics, and for years many of us have just dealt with this. However, now that most renderers can simulate global illumination, the problem is compounded. Again, the solution is to let the computer work in linear space, and bend only the final results.

Why we use 16 bit EXR's

So, now that we know we have to work with linear images, let's talk about how. Bit depth is the first problem. Many of us were using 8 bit images for years. I swore by Targas for so long, mainly because every program handled them the same. Targas are 8 bit images with color values of 0-255. So if you save the data with only 256 levels for each R-G-B color and alpha, when you gamma the image afterward, the dark end of the spectrum will be "crunchy" since there wasn't much data in there to begin with. Now that very little data has been stretched. Here's where 16 bit and 32 bit images come into play. You could use any storage format that supports 16 bit. 16 bit is plenty for me; 32 is a bit of depth overkill and makes very large images. Then you can adjust the resulting images with gamma and even exposure changes without those blacks getting destroyed. EXR seems to be the popular format since it came out of ILM and has support for multiple channels. It also has some extended compression options for per-scanline and zipped scanline storage so it can be read faster.
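To put a number on "there wasn't much data in there to begin with", here is a quick Python sketch. It counts how many integer code values are available for the darkest 5% of a linear image, then shows how much of the display range that 5% fills after a simple 2.2 gamma. The 5% cutoff is my pick for illustration, and the 16 bit case is counted as integers to keep the comparison simple; EXR's 16 bit is actually a half float, which spreads its precision differently.

```python
def codes_up_to(linear_limit, bits):
    """Number of integer code values from 0 up to a linear limit."""
    max_code = (1 << bits) - 1
    return int(linear_limit * max_code) + 1


def to_display(linear, gamma=2.2):
    """Apply a simple display gamma to a linear value."""
    return linear ** (1.0 / gamma)


DARK_LIMIT = 0.05  # assumed cutoff: the darkest 5% of linear values

codes_8bit = codes_up_to(DARK_LIMIT, 8)
codes_16bit = codes_up_to(DARK_LIMIT, 16)
display_share = to_display(DARK_LIMIT)  # fraction of the display range covered

print("8 bit codes in the darks: ", codes_8bit)
print("16 bit codes in the darks:", codes_16bit)
print("share of display range after gamma: %.0f%%" % (display_share * 100))
```

So in 8 bit, roughly a quarter of what you see on screen after the gamma is built from about a dozen levels.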

Does 3dsmax apply gamma?

Not by default. 3dsmax has had gamma controls in the program since the beginning, but many people don't understand why or how to use them. So what you've probably been working with and looking at is linear images that are trying to look like gamma adjusted images. And you're trying to make your CG look real? I bet your renderings always have a lot of contrast, and you're probably turning up the GI to try and get detail in the blacks.

Setting up Linear Workflow in 3dsmax

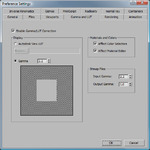

Setup 3dsmax Gamma

First, let's set up the gamma in 3dsmax. Start at the menu bar, Customize>Preferences, and go to the Gamma and LUT tab. (LUT stands for Look Up Table. You can now use custom LUT's for different media formats like film.) Enable the gamma option and set it to 2.2. (Ignore the gray box inside the black and white box.) Set the Input gamma to 2.2. This will compensate all your textures and backgrounds to look right in the end. Set the Output gamma to 1.0. This means we will see all our images in max with a gamma of 2.2, but when we save them to disk, they will be linear. While you're here, check the option in Materials and Color Selectors since we want to see what we're working with. That's pretty much it for max. Now let's talk about how this applies to Vray.
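Here is how I think about what those three settings do to a pixel value, sketched in Python. This is a mental model using plain power curves, not 3dsmax's actual code, and the function names are made up. Textures get un-bent on the way in, the display gets bent on the way to the screen, and the saved file is left alone.

```python
INPUT_GAMMA = 2.2    # textures and backgrounds coming in
DISPLAY_GAMMA = 2.2  # what we look at inside max
OUTPUT_GAMMA = 1.0   # what gets written to disk


def load_texture(encoded):
    """Remove the gamma baked into a texture so the renderer sees linear."""
    return encoded ** INPUT_GAMMA


def to_display(linear):
    """Bend the linear result for viewing on a monitor."""
    return linear ** (1.0 / DISPLAY_GAMMA)


def to_file(linear):
    """With output gamma 1.0 the saved value stays linear."""
    return linear ** (1.0 / OUTPUT_GAMMA)


texture_value = 0.5                   # hypothetical mid-gray texel from a JPG
linear = load_texture(texture_value)  # what the renderer works with

print("renderer sees:", round(linear, 4))
print("we see:       ", round(to_display(linear), 4))
print("saved to disk:", round(to_file(linear), 4))
```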

Setting up Gamma for Vray

You really don't have to do anything to Vray to make it work, but you can do a couple of things to make it work better. First off, many of Vray's controls for GI and anti-aliasing are based on color thresholds. It analyzes the color difference between pixels and, based on that, does some raytracing stuff. Now that we just turned on a gamma of 2.2, we will start to see more noise in our blacks. Let's let Vray know that we are in linear space and have it "Adapt" to this environment.

Vray has its own equivalent of exposure control called Color Mapping. Let's set the gamma to 2.2 and check the option "Don't affect colors (adaptation only)". This will tell Vray to work in linear space, and now our default color thresholds for anti-aliasing and GI don't need to be drastically changed. Sometimes when I'm working on a model or NOT rendering for composite, I turn off "Don't affect colors", which means that I'm encoding the 2.2 gamma, and when I save the file as a JPG or something, it will look right. (This easily confuses people, so stay away from switching around on a job.)
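To show why the blacks get noisy once you view through a 2.2 gamma, here is a little Python illustration. It is not Vray's sampler, just arithmetic with a plain power curve and a made-up noise amount. The same small linear error that sneaks under a color threshold looks several times bigger in the darks than in the brights once it's on screen.

```python
def to_display(linear, gamma=2.2):
    """Apply a simple display gamma to a linear value."""
    return linear ** (1.0 / gamma)


def visible_step(linear_value, linear_noise=0.01):
    """How big a fixed linear error looks after the display gamma."""
    return to_display(linear_value + linear_noise) - to_display(linear_value)


dark = visible_step(0.01)   # error sitting in a deep shadow
bright = visible_step(0.8)  # same error sitting in a bright area

print("on-screen error in the darks:  ", round(dark, 4))
print("on-screen error in the brights:", round(bright, 4))
print("ratio: %.1fx" % (dark / bright))
```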

Vray Frame Buffer

I tend to almost always use the Vray frame buffer. I love that it has a toggle for looking at the image with the view gamma and without. (Not to mention the "Track Mouse while Rendering" tool in there.) The little sRGB button will apply a 2.2 gamma to the image so you can look at it in gamma space while the rendering is still in linear space. Here is just an example of the same image with and without 2.2 gamma. Notice the sRGB button in the bottom of these images.

This asteroid scene is shown without the gamma, and with a 2.2 gamma. Try doing that to an 8 bit image. There would hardly be any information in the deep blacks. With a linear image, I can now see the tiny bit of GI on the asteroid's darker side.

Pitfalls...

Vray's Linear Workflow Checkbox

I'm referring to the check box in the Color Mapping controls of the Vray renderer. Don't use this. It's misleading. It's used to take an older scene that was lit and rendered without gamma in mind, and it does an inverse correction to all the materials. Investigate it if you're re-purposing an older scene.

Correctly Using Data Maps (Normal Maps)

Now that we told max to adjust every incoming texture to compensate for this monitor silliness, we have to be careful. For example, now when you load a normal map, it will try and apply a reverse gamma curve to the map, which is not what you want. This will make your renderings look really fucked up if they are gamma compensated. Surface normals will point the wrong way. To fix this, always set the normal map image to a predefined gamma of 1.0 when loading it. I'm still looking for discussion about whether reflection maps and other data maps should be set to 1.0. I've tried it both ways, and unlike normals, which are direction vectors, reflection maps just reflect more or less based on gamma. It makes a difference, but it seems fine.
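Here is a quick Python sketch of what the reverse gamma does to a normal. It assumes the common encoding where each color channel is (normal + 1) / 2, which is my assumption about the map, and uses a plain 2.2 power curve. A perfectly flat "straight out" normal gets tilted hard once the input gamma is applied to it.

```python
def decode_normal(rgb):
    """Turn a normal map color back into a direction vector."""
    return tuple(2.0 * c - 1.0 for c in rgb)


def apply_input_gamma(rgb, gamma=2.2):
    """What the input gamma does to a map that isn't set to 1.0."""
    return tuple(c ** gamma for c in rgb)


flat_color = (0.5, 0.5, 1.0)  # the usual light blue of a flat normal map

correct = decode_normal(flat_color)                   # loaded with gamma 1.0
bent = decode_normal(apply_input_gamma(flat_color))   # loaded with gamma 2.2

print("gamma 1.0:", correct)
print("gamma 2.2:", tuple(round(c, 3) for c in bent))
```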

Always Adopt on Open

I've also taken on the ritual of always adopting the gamma of the scene I'm loading. Always say yes, and you shouldn't have problems jumping from job to job, scene to scene.

Hope that helps to explain some things, or at least starts the process of understanding it. Feel free to post questions, since I tend to try to keep the tech explanations very simple. I'll try to post a follow up article on how to use linear EXR's in After Effects.

Friday, December 3, 2010 at 11:41AM

Lighting and Rendering

Material

Don't get me wrong. Max's hair and fur is pretty much a pain in the ass and really should be dealt with. I'm now using Hair Farm like I mentioned above, and the difference is worlds apart. Hair Farm is fast, robust and the results look very nice. (Speed is king in look development. If you can render it fast, you can render it over and over, really working with the materials and lighting to make it look the way you want.)

Saturday, November 13, 2010 at 8:26PM

Wow, that title sounds like a fancy Siggraph paper...

One of my favorite parts of 3ds Max is the Particle Flow system. Maybe because I had a hand in designing it, or maybe because Oleg over at Orbaz Technologies is brilliant?

The year was 2001...

3dsmax was in desperate need of a new particle system. Autodesk, or was it Kinetix, no wait, Autodesk Multimedia, no wait, discreet? Shit, I don't remember. (And I don't care anymore.) Anyway, they hired the right man for the job: Oleg Bayborodin. Oleg had some previous experience with particles and wanted to take on building an event driven particle system.

Here's where I come in. I designed the entire sub-system architecture! (Wow, I can't even lie very well.) Just kidding. Oleg designed and built the whole thing. My job was to consider the user experience, assist in UI design and provide use cases for how the tool was going to be used.

Particle Flow Design Analogy

I used to love experimenting with electronics as a kid, and when I saw the design of Particle Flow, it reminded me of electronic schematics. (I built my share of black boxes with blinking LED's in them, pretending they were "bombs" or hi tech security devices.) So I ran with that as an analogy. Recently I came across some of the original designs and images that inspired the Particle Flow UI design.

Schematic Inspirations

We then started to apply it to these particle events, which were more like integrated circuits than transistors and resistors. We used a simple flow chart tool to design them and that started to look like this.

From there, the design started to look more like what we see today.

Unfortunately, since we were building a new core system from scratch, some of the use cases couldn't be achieved with this first incarnation of the system. We only had time to do so many operators and tests. Bummer. But everyone figured we'd get to a second round of particle operators in the next release of max, so no big deal. However, Autodesk did some restructuring and a few engineers were let go, and we were left with a great core and no one to build on it.

Luckily, Oleg went on to create a series of Particle Flow extension packs and I hope he's making a good living off them. So let's look at using Particle Flow in production.

Particle Wheat Field for Tetra Pak

Here's a fun commercial. Let's go over some of the ways particle flow was used in this spot for Tetra Pak. First, let's take a look at the spot.

I was asked to create a field of wheat that could be cut down, sucked up into the air, and re-grown. It seems so simple to me now, but when you think about it, that's a pretty tall order. I went directly to a particle system due to the overall number of wheat stalks. (Imagine animating this by hand!)

At first I created a 3d wheat stalk and instanced it as particles. This was a great start, but it looked very fake and CG, and since I modeled each wheat grain (or whatever you call individual wheats) the geometry was pretty heavy. Other issues were having the wheat grow, get cut and re-grow. I tried an animated CG wheat stalk as an animated instanced mesh particle, but this brought the system to a crawl. I quickly realized that a "card" system would work much better. Also, Bent has many stop motion animators, so we thought we would lean on our down shooter and an animator. This worked great. We now had a sequence of animated paper wheat stalks that could grow by simply swapping out the material per frame. (Did you get all that?)

Blowing in the wind was also a tricky thing at first. I thought I might be able to slap a bend modifier on the card and have the particles randomize the animation per particle, but that won't work. Each particle would just have its own randomized bend animation, and they wouldn't move together like a field of wheat. Instead, I had to use Lock/Bond, part of Particle Flow Box #1. (Now part of 3ds Max.) This worked really well. The stalks waved from the base, which was not exactly what I wanted, but it worked well enough.

Wheat stalk shadows were my next problem. Since I was now using cards with opacity, my shadows were shadows of the cards, not the alpha images. The only way to deal with this was brute force. I had to switch over to ray traced shadows. At this time I split up the wheat field into 4 sections. This allowed me to render each section and not blow out my render farm memory.

From here, the cutting of wheat was a simple event, along with growing the wheat back. Much of the work was in editing the sequence of images on the cards. The final system looked like this.

The Falling Bread

The second problem I ran into on this job was when the bread falls on Bob. (That's the bunny's name.) At first I tried to use max's embedded game dynamics system, reactor. I knew this would fail, but I always give things a good try first. It did fail. Terribly. Although I did get a pile of objects to land on him, they jiggled and chattered and would never settle. Not just settle in a way I like, but settle at all.

Particle Flow Box #2 had just been released. This new extension pack for particle flow allows you to take particles into a dynamic event where simulations can be computed. Particle Flow Box#2 uses Nvidia's PhysX dynamics system, which proved to be very usable for production animation. (PhysX dynamics are now available for 3ds Max 2011 as an extension pack for subscription users.)

With Box #2 installed, making the bread drop was so easy. There really isn't much to say about it. Box #2 has a default system that drops things, so I was able to finish the simulation in a day or so, tweaking some bounce and friction settings. I used a small tube on the ground to contain the bread so that it would "pile up" a bit around the character.

Schooling Fish

A shot of the Sardine Model

To show just one more example of how flexible Particle Flow really is, here's a spot I worked on where I used Particle Flow to control a school of sardines for the Monterey Aquarium. I should mention that I only worked on the sardines in the first spot, and all of these spots were done by Fashion Buddha.

3ds Max, Particle Flow, Tetra Pak in 3ds max, CGI